How to create an interactive Node.js lab with Vitest?

We'll divide this part into 5 sections:

- Creating lab metadata

- Setting up lab defaults

- Setting up lab challenges

- Setting up evaluation script

- Setting up test file

Introduction

This guide assumes that you have already created an interactive course from your instructor panel. If not, go here and set it up first.

Step 1 - Creating lab metadata

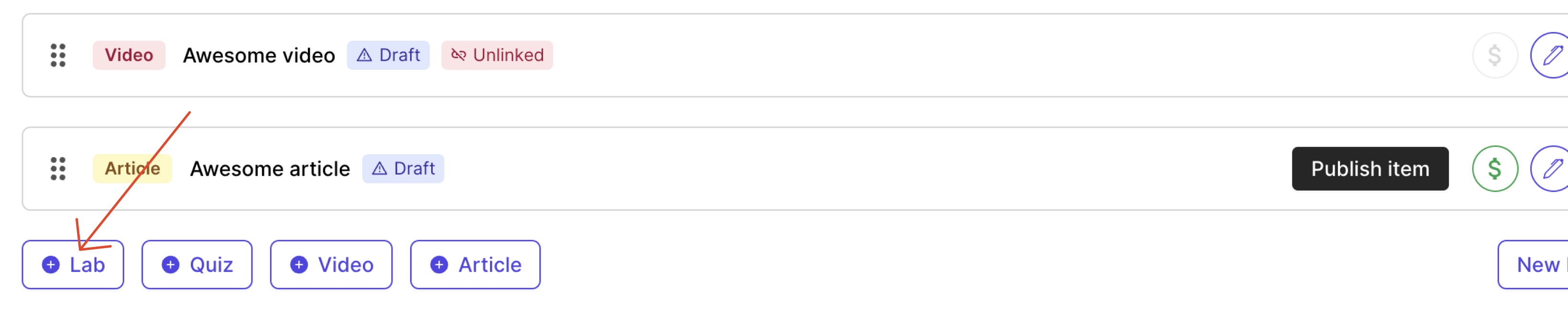

- Add a new lab item on your course curriculum page.

A new lab item gets added. Click on the edit pencil button on the right. This should open the lab library widget in your instructor panel.

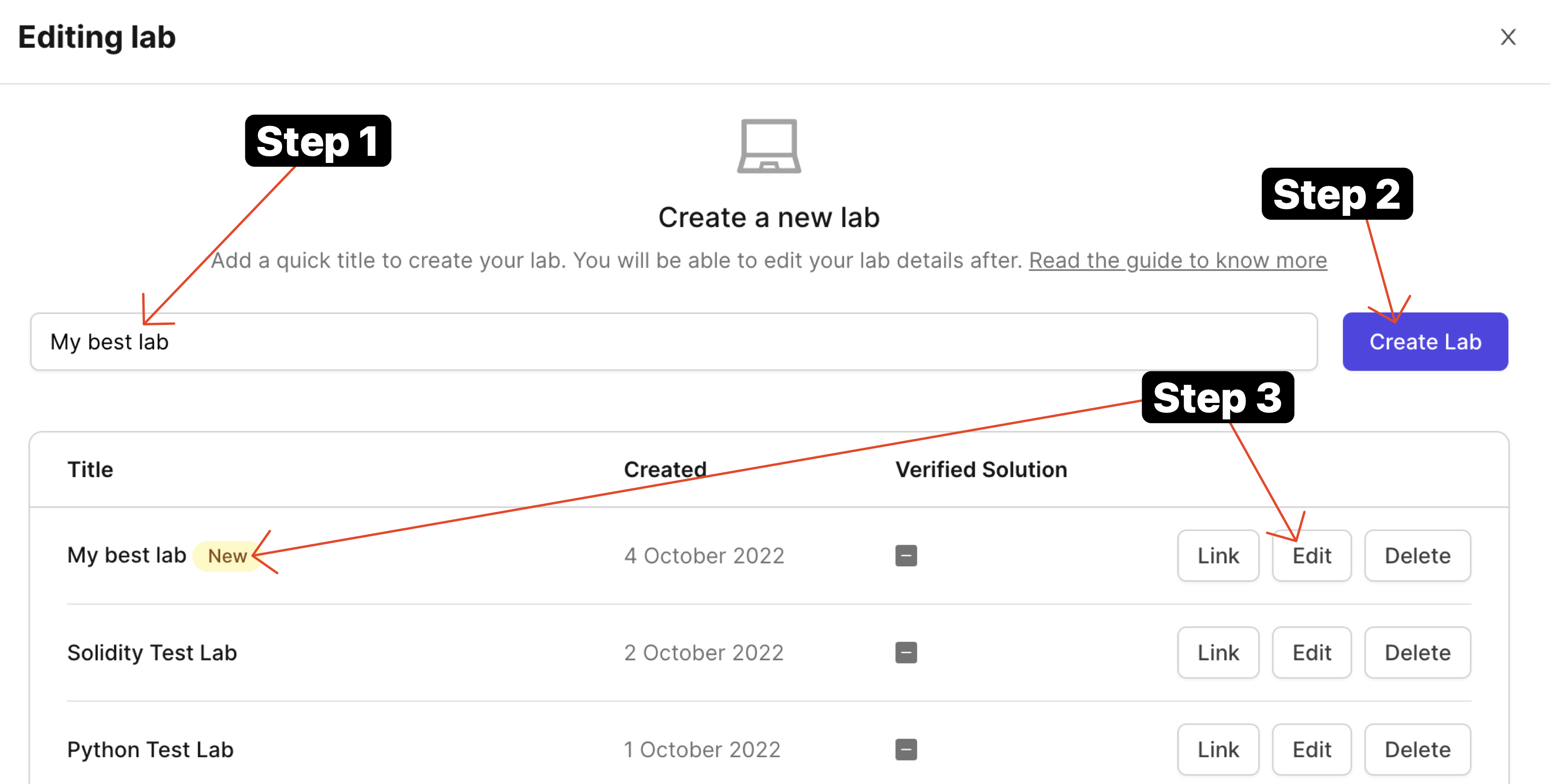

You should now be able to enter a lab name and press the "Create Lab" button. This creates a lab that you can edit.

- Once it is created, click on the "Edit" button and you'll arrive at the lab designer view.

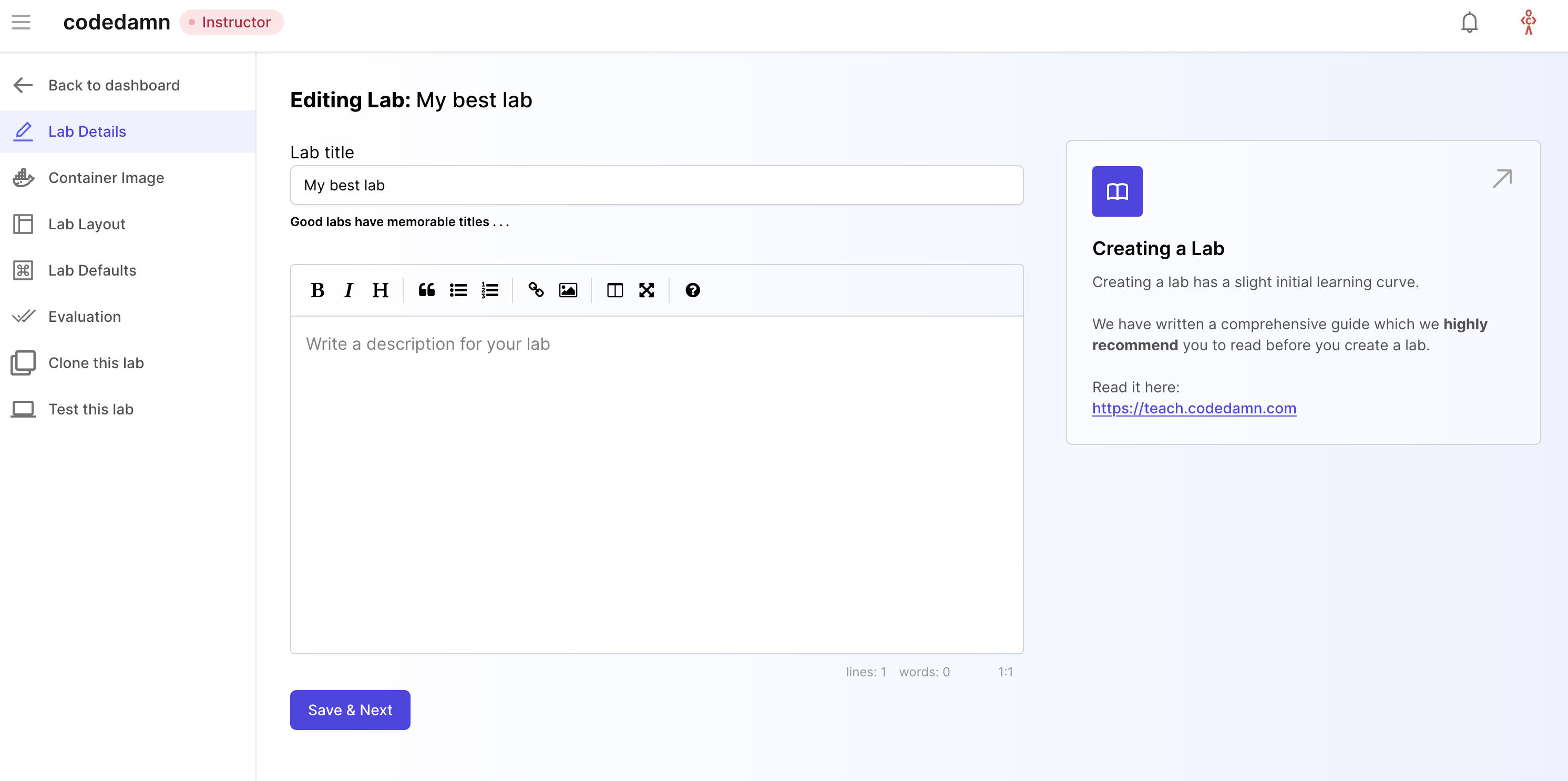

This is where you will add metadata to your labs and set up your labs for evaluation. Let's take a look at all the tabs here.

Lab Details

Lab details is the tab where you add two important things:

- Lab title

- Lab description

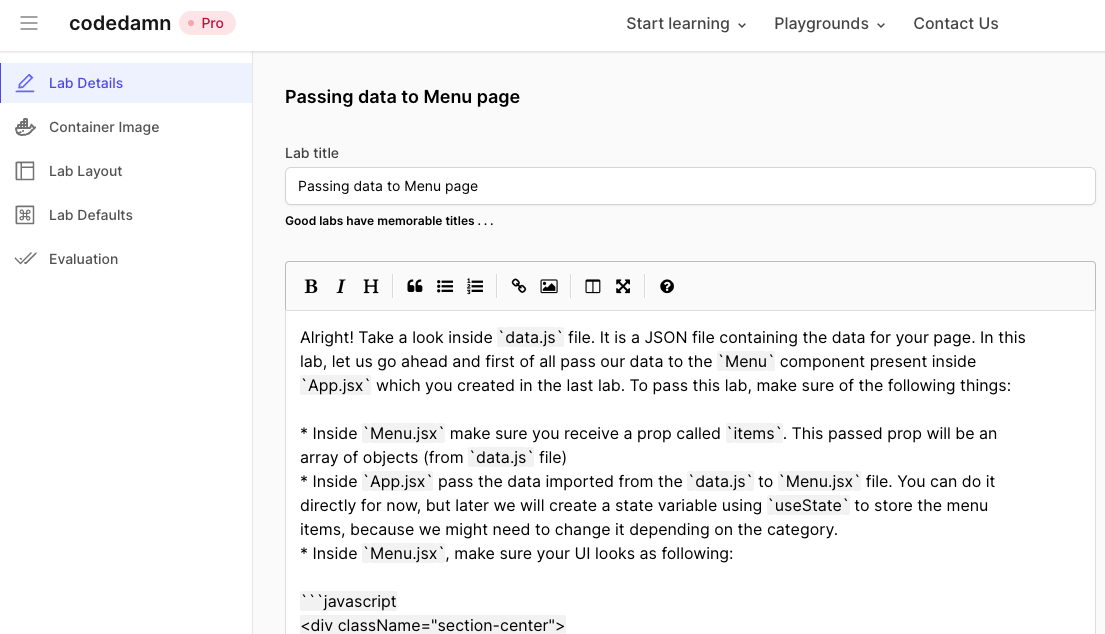

Once both of them are filled in, it will appear as follows:

Let's move to the next tab now.

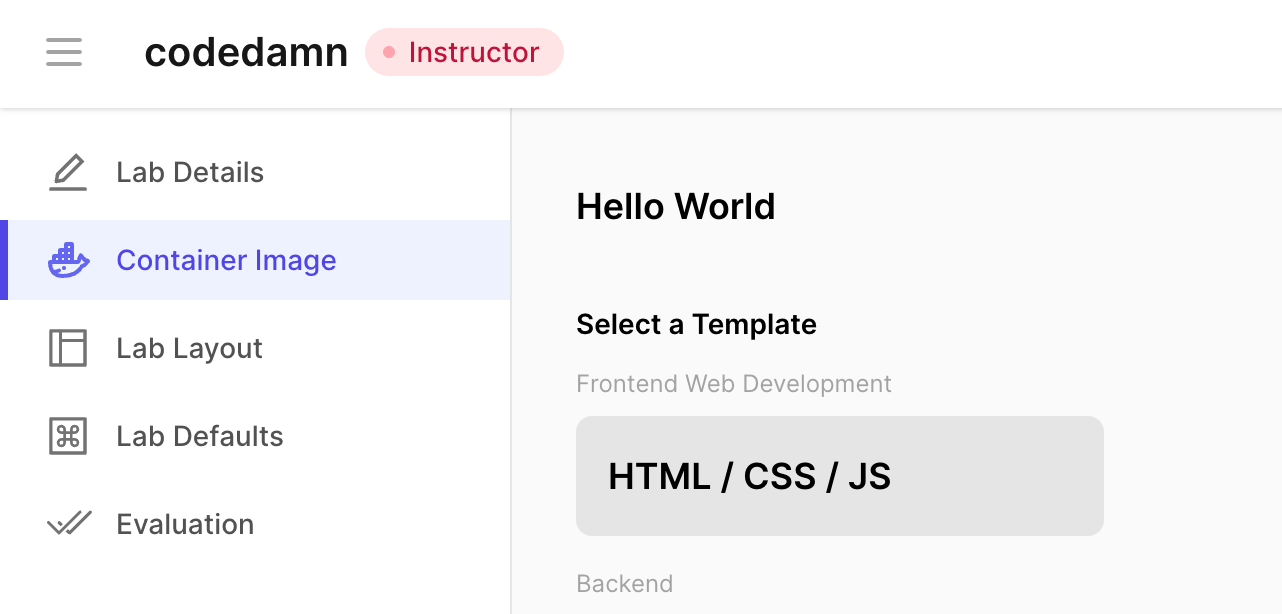

Container Image

Container image should be set as "HTML/CSS" for HTML/CSS labs. The following software is available by default in this image:

- static-server npm package installed globally: static-server

- Puppeteer installation with Chrome for E2E testing (you can read about Puppeteer testing here)

- Node.js v14, Yarn, NPM, Bun.js

The following NPM packages (non-exhaustive list) are globally installed:

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- [email protected]

- @drizzle/[email protected]

- [email protected]

Lab Layout

Lab layout can be used to set a default layout in which the lab boots. We currently support the following layout types:

Terminal + IDE + Browser

This includes everything codedamn has to offer: a terminal at the bottom, an IDE in the center (powered by Monaco on desktops and CodeMirror on mobile phones), and a browser preview of (ideally) what the user is working on. This is best if your playground runs an HTTP server.

Terminal + Browser

This layout is for times when you don't need an IDE and only want something hosted inside a browser, like an XSS challenge.

Terminal + IDE

This layout is for backend programming without a website UI. It includes only a terminal and an IDE, similar to VS Code. For example: headless E2E testing, writing Python scripts, Discord bots, etc.

Terminal only

This includes nothing except a terminal. Best for Linux/bash labs where you want users to work exclusively in the terminal.

TIP

You can configure the layout through the .cdmrc file too. More information here.

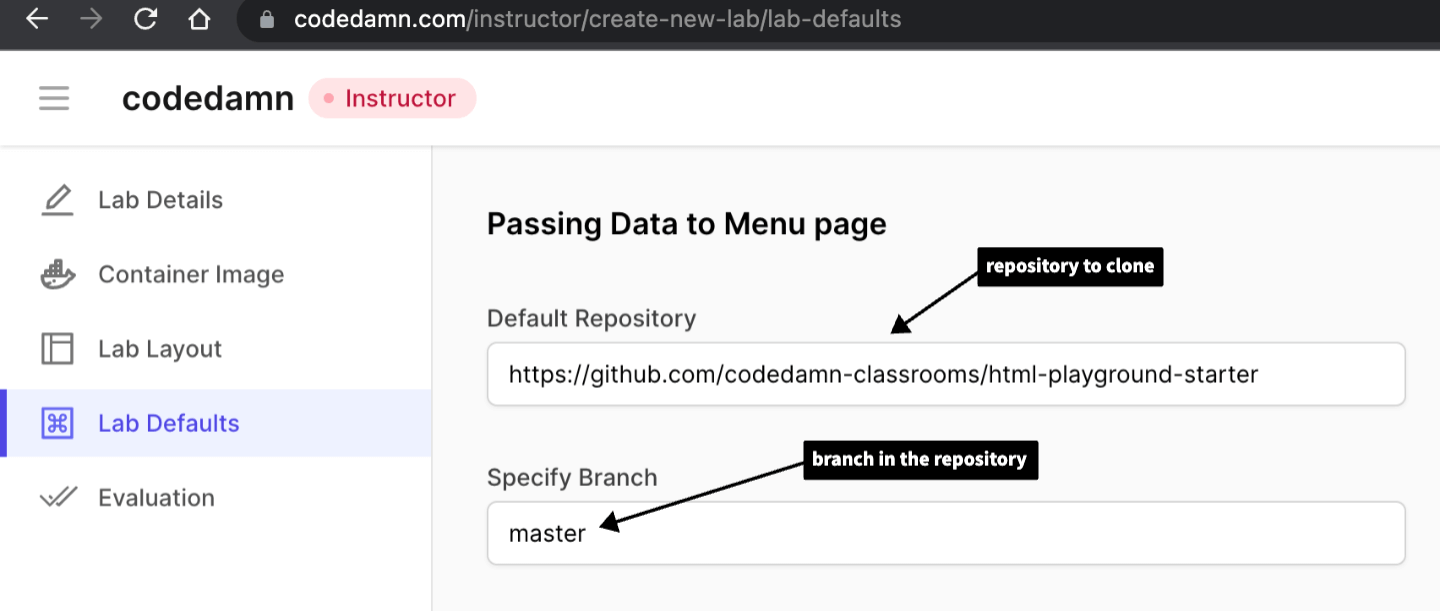

Step 2 - Lab Defaults

The lab defaults section defines how your lab environment boots. It is one of the most important parts, because a wrong default environment might confuse your students. Therefore, it is important to set it up properly.

When a codedamn playground boots, it can set up a filesystem for the user by default. You can specify the starting files by providing a git repository and a branch name:

INFO

You will find a .cdmrc file in the repository given to you above. It is highly recommended, at this point, that you go through the .cdmrc guide and how to use .cdmrc in playgrounds to understand exactly what a .cdmrc file is. Once you understand how to work with .cdmrc, come back to this area.

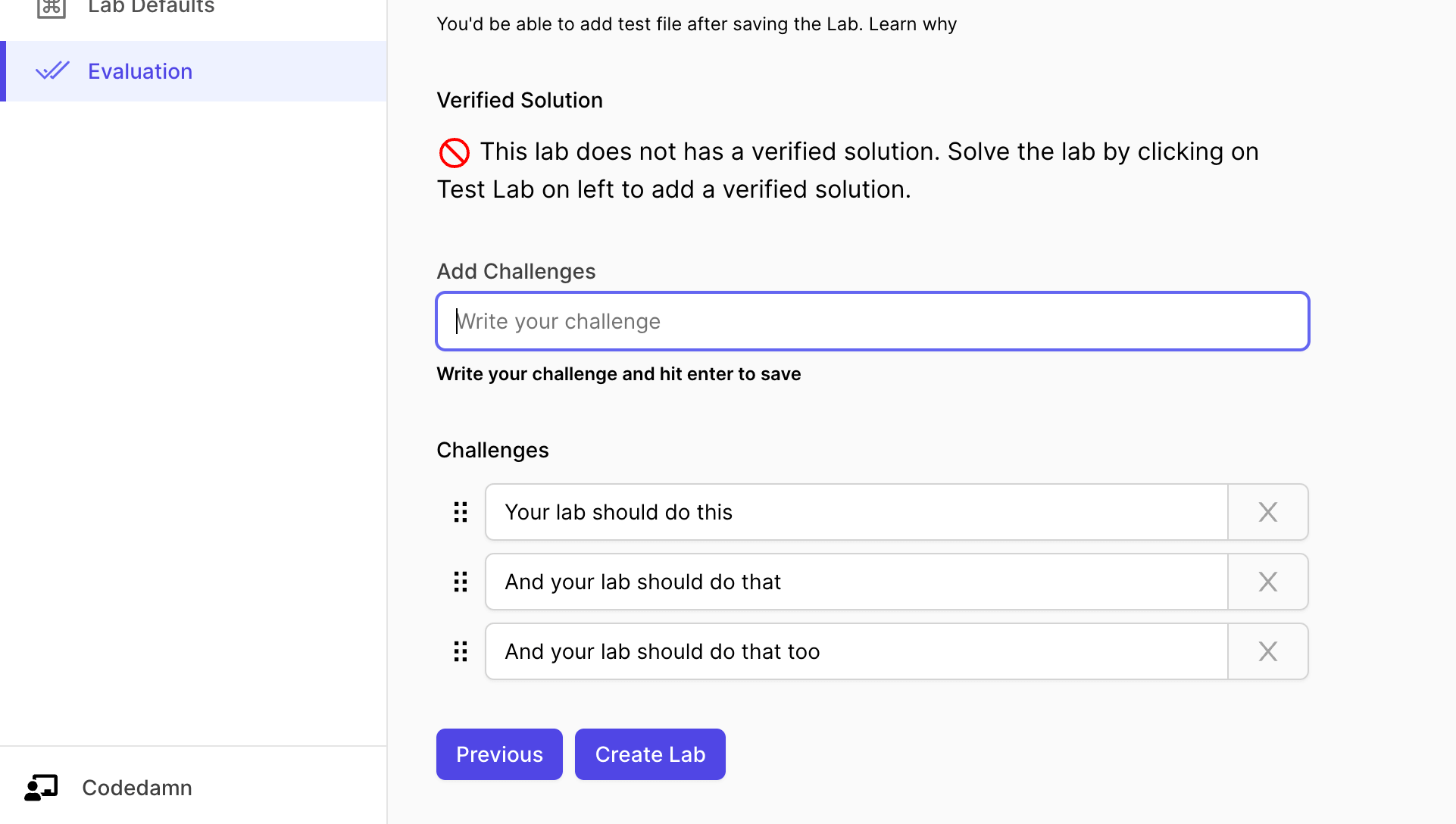

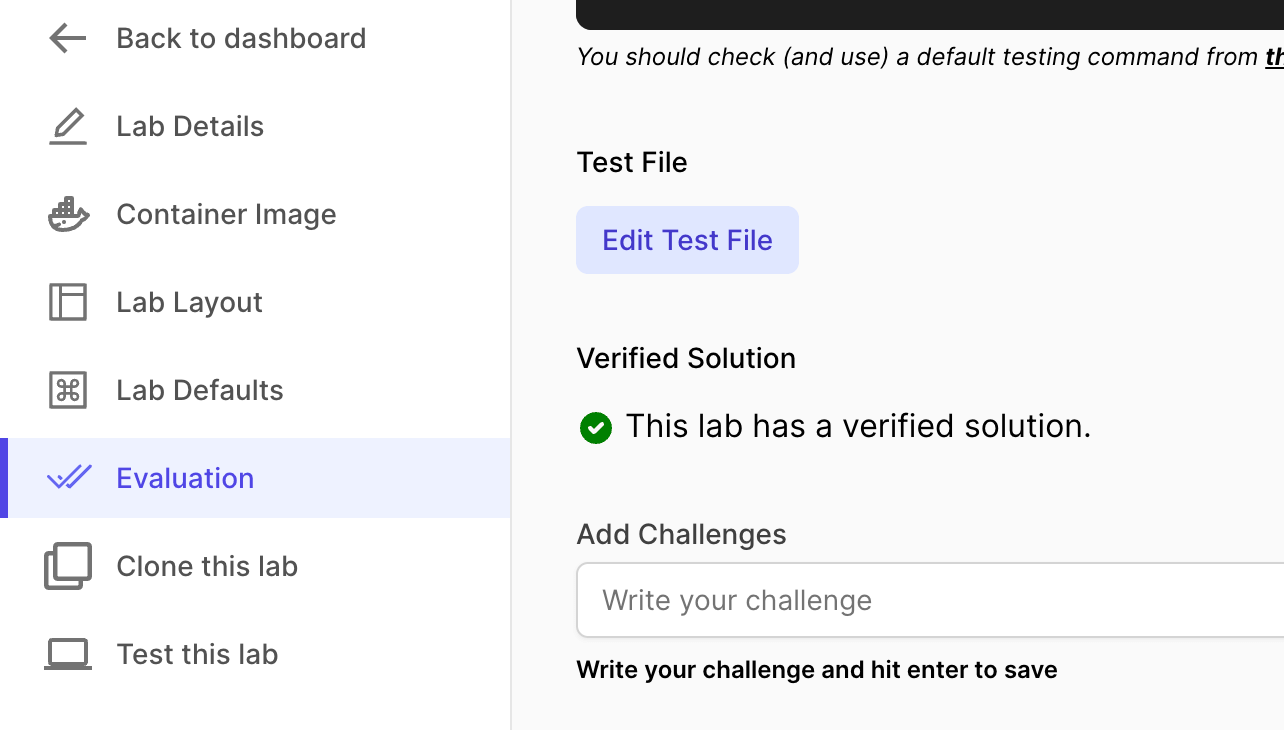

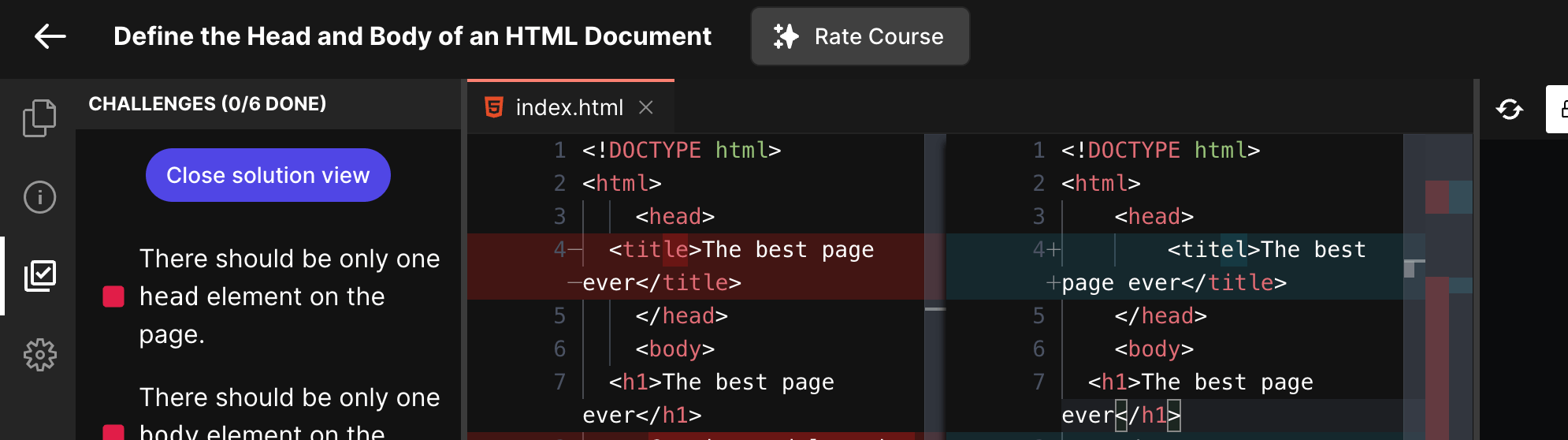

Step 3 - Lab challenges

The next step is to set up challenges and evaluation for your lab. This is the part where your learners can learn the most, because they will have to pass certain challenges.

TIP

It is highly recommended for you to watch the video below to understand the architecture

This is also the biggest advantage of hosting your course on codedamn: it makes your courses truly interactive and hands-on for your users.

Let's start by setting up challenges.

The interface above can be used to add challenges to your lab. You can also add hints to every single challenge you put here.

TIP

When the user runs the lab but fails a few challenges, the hint for that particular failed challenge is displayed to the user.
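The hint behaviour can be pictured with a small sketch. The data shape below (a `challenges` list with `title` and `hint` fields, and a parallel `results` array of booleans) and the helper name `hintsForFailed` are assumptions made for illustration; they are not codedamn's internal format or code.

```javascript
// Hypothetical challenge list, as an instructor might define it in the UI.
const challenges = [
    { title: 'Export a variable', hint: 'Use a default export in index.js' },
    { title: 'Variable has the correct value', hint: "The value should be 'Hello World'" },
]

// Hypothetical pass/fail results, one entry per challenge.
const results = [true, false]

// Collect the hints of the challenges that did not pass.
function hintsForFailed(challengeList, resultList) {
    return challengeList
        .filter((_, index) => resultList[index] !== true)
        .map(challenge => challenge.hint)
}

console.log(hintsForFailed(challenges, results))
```

Here only the second challenge fails, so only its hint is collected.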

Step 4 - Evaluation Script

TIP

HIGHLY recommended to watch the video before you read the documentation:

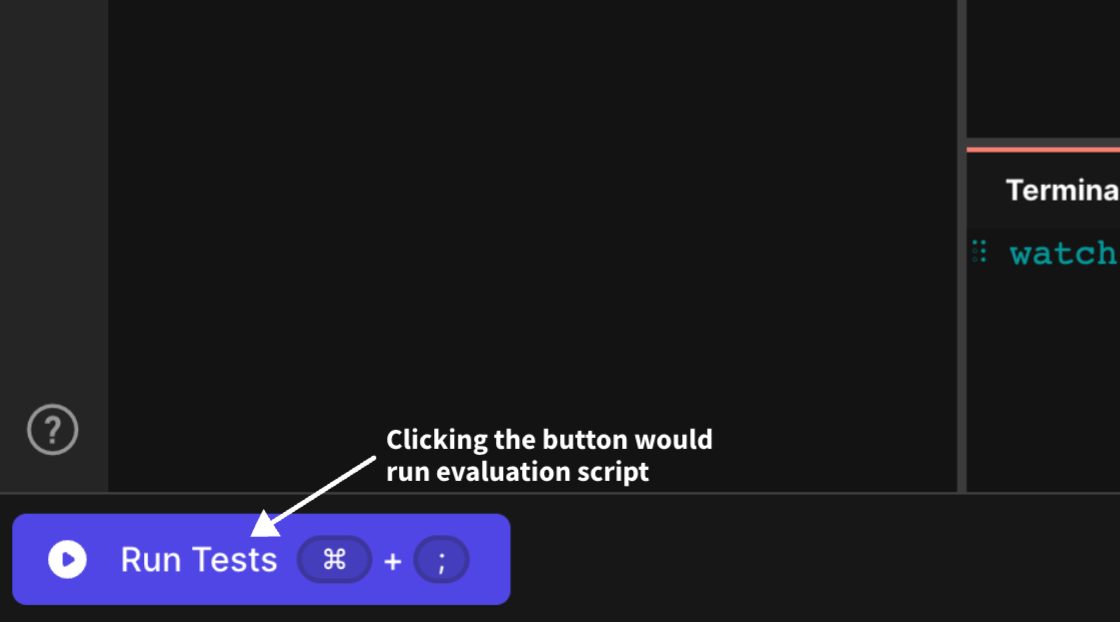

The evaluation script is what runs when the user on the codedamn playground clicks on the "Run Tests" button.

Since we're going to test this lab using Vitest, we'll set up the Vitest testing environment. This is what your evaluation script would look like:

```bash
#!/bin/bash
set -e 1

# Install vitest and testing util
cd /home/damner/code
bun add [email protected] [email protected] @testing-library/[email protected] --dev
mkdir -p /home/damner/code/.labtests

# Move test file
mv $TEST_FILE_NAME /home/damner/code/.labtests/nodecheck.test.js

# setup file
cat > /home/damner/code/.labtests/setup.js << EOF
import '@testing-library/jest-dom'
EOF

# vitest config file
cat > /home/damner/code/.labtests/config.js << EOF
import { defineConfig } from 'vite'

// https://vitejs.dev/config/
export default defineConfig({
    plugins: [],
    test: {
        globals: true,
        environment: 'jsdom',
        setupFiles: '/home/damner/code/.labtests/setup.js',
    }
})
EOF

# process.js file
cat > /home/damner/code/.labtests/process.js << EOF
import fs from 'node:fs'

const payload = JSON.parse(fs.readFileSync('./payload.json', 'utf8'))
const answers = payload.testResults[0].assertionResults.map(test => test.status === 'passed')

fs.writeFileSync(process.env.UNIT_TEST_OUTPUT_FILE, JSON.stringify(answers))
EOF

# package.json
cat > /home/damner/code/.labtests/package.json << EOF
{
    "type": "module"
}
EOF

# run test
(bun vitest run --config=/home/damner/code/.labtests/config.js --threads=false --reporter=json --outputFile=/home/damner/code/.labtests/payload.json || true) | tee /home/damner/code/.labtests/evaluationscript.log

# Write results to UNIT_TEST_OUTPUT_FILE to communicate to frontend
cd /home/damner/code/.labtests
node process.js
```

Let's understand what the above evaluation script is doing:

- We navigate to users' default code directory and install

vitestandvite. We do this because we'll usevitestas the test runner. - We then move the cloned test file (more on this in the next step) inside

.labtestsfolder. This folder is hidden from the user in the GUI while test is running. - We create a custom configuration for vite which would be read by vitest for testing.

- We then create a

process.jsfile that would read the results written by vitest and process them to write them on a file inside$UNIT_TEST_OUTPUT_FILEenvironment variable. This is important because this file is read by the playground to evaluate whether the challenges passed or failed. - Whatever your mapping of final JSON boolean array written in

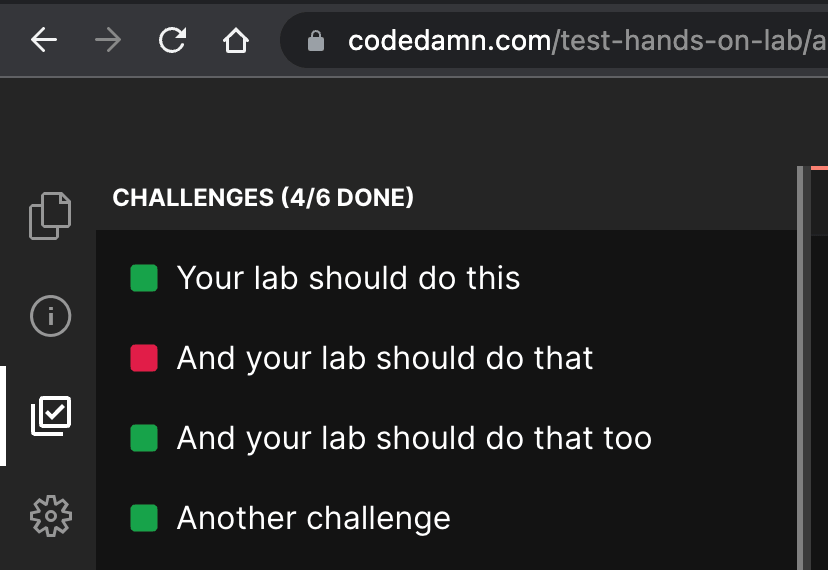

process.env.UNIT_TEST_OUTPUT_FILEis, it is matched exactly to the results on the playground. For example, if the array written is[true, false, true, true], the following would be the output on playground:

- Finally we run the test suite using

vitestwithout multithreading (as we want sequential results) and then we runprocess.jsfile using Node. This runs the test suite.
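The mapping performed by process.js can be tried out in isolation. The sketch below is a minimal illustration, not the platform's code: `samplePayload` is a hypothetical, hand-written object that only mimics the fields the script reads (`testResults[0].assertionResults[].status`), and `toChallengeResults` is a name introduced here for illustration.

```javascript
// Hypothetical payload that mimics only the fields process.js reads
// from the JSON report (hand-written, not real reporter output).
const samplePayload = {
    testResults: [
        {
            assertionResults: [
                { title: 'Variable should be exported', status: 'passed' },
                { title: 'Variable should have correct value', status: 'failed' },
            ],
        },
    ],
}

// Same mapping as process.js: one boolean per test, in file order.
function toChallengeResults(payload) {
    return payload.testResults[0].assertionResults.map(test => test.status === 'passed')
}

console.log(JSON.stringify(toChallengeResults(samplePayload))) // prints [true,false]
```

Because the array is positional, the order of the test blocks in the test file decides which challenge each boolean is matched to.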

NOTE

You can set up a full testing environment in this block of the evaluation script (installing more packages, etc., if you want). However, your test file will be timed out after 30 seconds. Therefore, make sure all of your testing can happen within 30 seconds.

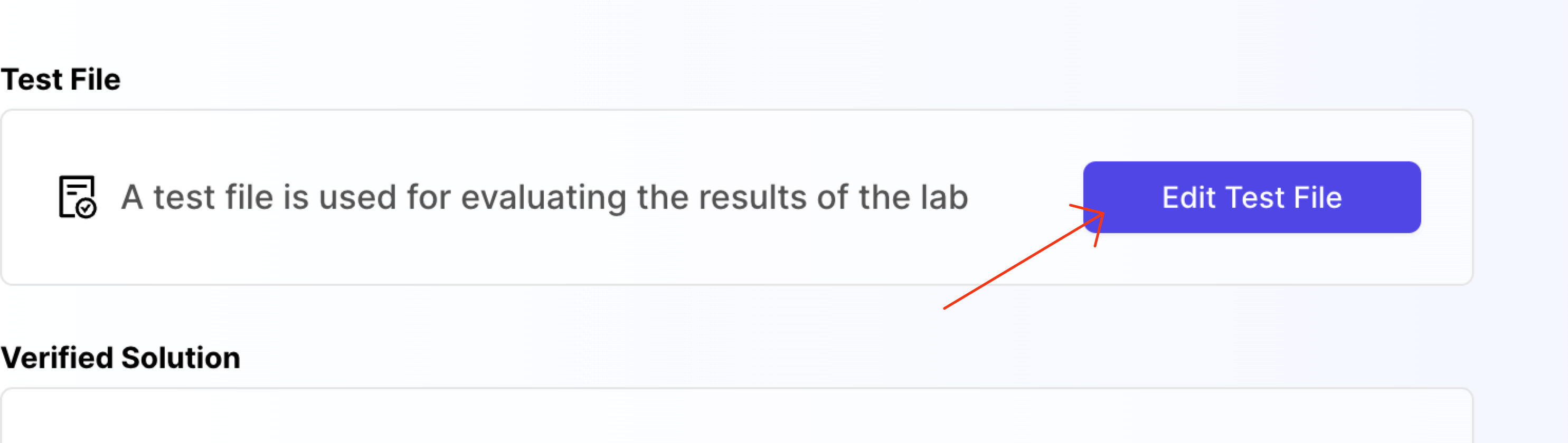

Step 5 - Test file

You will see a button named Edit Test File in the Evaluation tab. Click on it.

When you click on it, a new window will open. This is a test file area.

You can write anything here. Whatever script you write here can be executed from the Test command to run section inside the evaluation tab we were in earlier.

The point of having a file like this is to provide you with a place where you can write your evaluation script.

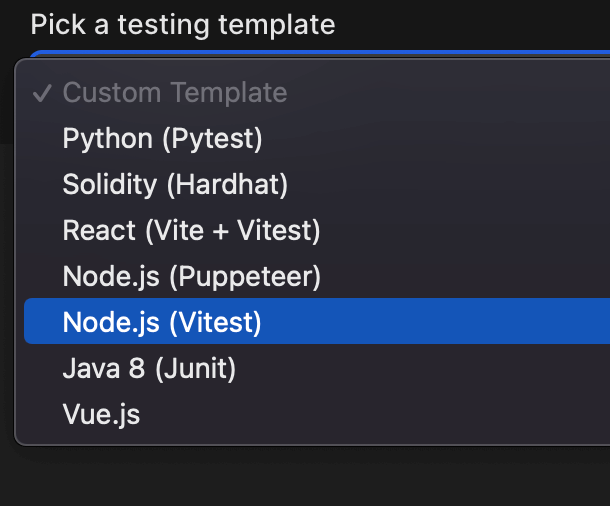

For Node.js labs tested with Vitest, you can use the default test file of Node.js (Vitest) evaluation:

The moment you select Node.js (Vitest), the following code should appear in your editor:

```js
describe('Test runner suite', () => {
    test('Variable should be exported', async () => {
        const userVariable = await import('/home/damner/code/index.js').then(t => t.default)
        expect(typeof userVariable === 'undefined').to.be.false
    })

    test('Variable should have correct value', async () => {
        const userVariable = await import('/home/damner/code/index.js').then(t => t.default)
        expect(userVariable === 'Hello World').to.be.true
    })
})
```

This code is pretty self-explanatory. You can do anything and everything you want here. Please make sure of the following things:

- If the number of `test` blocks is less than the number of challenges added back in the UI, the "extra" UI challenges automatically stay as "false". If you add more `test` blocks in the test file than there are challenges, the extra results are ignored. Therefore, it is important that the number of `test` blocks is the same as the number of challenges you added in the challenges UI.

- Use only a single `describe` block (this is how the `process.js` script above in the evaluation script section expects the output to be).

- We don't have to import `describe`, `test` and `expect` because we're using `globals: true` in the vitest configuration above. If you don't use that, you'll have to import those functions as well.

- It is better to dynamically import user code (instead of using top-level imports) because that way, if the user file doesn't exist, your whole test suite will not crash.
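The points about mismatched counts and about dynamic imports can be sketched in plain Node.js. This is an illustration under stated assumptions: `safeImport` and `alignResults` are hypothetical helper names, and `alignResults` only models the matching behaviour described in the list; it is not the playground's actual code.

```javascript
// Dynamically import a module and return its default export.
// Resolves to undefined instead of throwing when the file is
// missing or fails to load.
async function safeImport(modulePath) {
    try {
        const loaded = await import(modulePath)
        return loaded.default
    } catch (error) {
        return undefined
    }
}

// Model of the matching rule: missing results count as false,
// and results beyond the number of challenges are ignored.
function alignResults(results, challengeCount) {
    return Array.from({ length: challengeCount }, (_, index) => results[index] === true)
}

safeImport('/home/damner/code/does-not-exist.js').then(value => {
    console.log(value) // undefined when the file is absent
})

console.log(alignResults([true, false], 3)) // [ true, false, false ]
console.log(alignResults([true, true, true], 2)) // [ true, true ]
```

With a guard like `safeImport`, a missing user file turns into a failed assertion inside one `test` block rather than an import error that stops the whole file from loading.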

TIP

Read more about unit and integration testing with Vitest here: https://vitest.dev/api

This completes your evaluation script for the lab. Your lab is now almost ready for users.

Setup Verified Solution (Recommended)

A verified solution is highly recommended. To set up a verified solution for your lab, once your lab is ready, all you have to do is click on "Test lab", write code that passes your lab, and run that code once.

Once you do that, your lab will be marked as a lab with a verified solution. It also helps students, as we can show them a Monaco diff editor showing the verified solution from the creator (you).

At this point, your lab is complete. You can now link this lab in your course, ready to be served to thousands of students 😃 Watch the video tutorial below to understand: